这篇文章是为了总结矩阵求导和反向传播推导的,

求导布局包括:分子布局或分母布局。

分子布局:求导结果的维度以分子为主。结果矩阵的行对应于分母(输入)变量,列对应于分子(输出)变量。例如:

∂ f 2 × 1 ( x ) ∂ x 3 × 1 T = [ ∂ f 1 ∂ x 1 ∂ f 1 ∂ x 2 ∂ f 1 ∂ x 3 ∂ f 2 ∂ x 1 ∂ f 1 ∂ x 2 ∂ f 2 ∂ x 3 ] 2 × 3 \frac{\partial {\boldsymbol{f}}_{2 \times 1}( \boldsymbol{x}) }{\partial \boldsymbol{x}_{3 \times 1}^T} =

{\left\lbrack \begin{array}{lll}

\frac{\partial f_1}{\partial x_1} & \frac{\partial f_1}{\partial x_2} &

\frac{\partial f_1}{\partial x_3} \\ \frac{\partial f_2}{\partial x_1} & \frac{\partial f_1}{\partial x_2} & \frac{\partial f_2}{\partial x_3} \end{array}\right\rbrack }_{2 \times 3}

∂ x 3 × 1 T ∂ f 2 × 1 ( x ) = [ ∂ x 1 ∂ f 1 ∂ x 1 ∂ f 2 ∂ x 2 ∂ f 1 ∂ x 2 ∂ f 1 ∂ x 3 ∂ f 1 ∂ x 3 ∂ f 2 ] 2 × 3

分母布局:求导结果的维度以分母为主。结果矩阵的行对应于分子(输出)变量,列对应于分母(输入)变量:

∂ f 2 × 1 T ( x ) ∂ x 3 × 1 = [ ∂ f 1 ∂ x 1 ∂ f 2 ∂ x 1 ∂ f 1 ∂ x 2 ∂ f 2 ∂ x 2 ∂ f 1 ∂ x 3 ∂ f 2 ∂ x 3 ] 3 × 2 \frac{\partial {\boldsymbol{f}}_{2 \times 1}^{T}\left( \boldsymbol{x}\right) }{\partial {\boldsymbol{x}}_{3 \times 1}} =

{\left\lbrack \begin{array}{ll}

\frac{\partial f_1}{\partial {x}_1} & \frac{\partial f_2}{\partial {x}_1}\\

\frac{\partial f_1}{\partial {x}_2} & \frac{\partial f_2}{\partial {x}_2}\\

\frac{\partial f_1}{\partial {x}_3} & \frac{\partial f_2}{\partial {x}_3} \end{array}\right\rbrack }_{3 \times 2}

∂ x 3 × 1 ∂ f 2 × 1 T ( x ) = ⎣ ⎢ ⎢ ⎡ ∂ x 1 ∂ f 1 ∂ x 2 ∂ f 1 ∂ x 3 ∂ f 1 ∂ x 1 ∂ f 2 ∂ x 2 ∂ f 2 ∂ x 3 ∂ f 2 ⎦ ⎥ ⎥ ⎤ 3 × 2

对于一个 n n n Y \boldsymbol{Y} Y m m m X \boldsymbol{X} X n + m n+m n + m y i y_i y i x j x_j x j ∂ y i x j \frac{\partial y_i}{x_j} x j ∂ y i

我们定义 m × n m \times n m × n X \boldsymbol{X} X f f f X \boldsymbol{X} X

∂ f ∂ X = [ ∂ f ∂ x 11 ∂ f ∂ x 21 ⋯ ∂ f ∂ x m 1 ∂ f ∂ x 12 ∂ f ∂ x 22 ⋯ ∂ f ∂ x m 2 ⋮ ⋮ ⋱ ⋮ ∂ f ∂ x 1 n ∂ f ∂ x 2 n ⋯ ∂ f ∂ x m n ] \frac{\partial f}{\partial \boldsymbol{X}} = \left\lbrack \begin{matrix} \frac{\partial f}{\partial {x}_{11}} & \frac{\partial f}{\partial {x}_{21}} & \cdots & \frac{\partial f}{\partial {x}_{m1}} \\ \frac{\partial f}{\partial {x}_{12}} & \frac{\partial f}{\partial {x}_{22}} & \cdots & \frac{\partial f}{\partial {x}_{m2}} \\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial f}{\partial {x}_{1n}} & \frac{\partial f}{\partial {x}_{2n}} & \cdots & \frac{\partial f}{\partial {x}_{mn}} \end{matrix}\right\rbrack

∂ X ∂ f = ⎣ ⎢ ⎢ ⎢ ⎢ ⎢ ⎡ ∂ x 1 1 ∂ f ∂ x 1 2 ∂ f ⋮ ∂ x 1 n ∂ f ∂ x 2 1 ∂ f ∂ x 2 2 ∂ f ⋮ ∂ x 2 n ∂ f ⋯ ⋯ ⋱ ⋯ ∂ x m 1 ∂ f ∂ x m 2 ∂ f ⋮ ∂ x m n ∂ f ⎦ ⎥ ⎥ ⎥ ⎥ ⎥ ⎤

矩阵上的重要的标量函数包括矩阵的迹和行列式行列式。

考虑向量对标量的求导。假设我们有一个函数

f ( x ) = [ a 1 x , a 2 x 2 ] T \boldsymbol{f}\left( x\right) = {\left\lbrack a_1x,a_2{x}^{2}\right\rbrack }^{T}

f ( x ) = [ a 1 x , a 2 x 2 ] T

,对于向量的各个元素,我们可以简单地应用标量对标量求导的知

∂ f ∂ x = [ a 1 , 2 a 2 x ] T \frac{\partial \boldsymbol{f}}{\partial x} = {\left\lbrack a_1,2a_2x\right\rbrack }^{T}

∂ x ∂ f = [ a 1 , 2 a 2 x ] T

在向量微积分中,向量 y \boldsymbol{y} y x x x y \boldsymbol{y} y

向量函数(分量为函数的向量) f ( y ) = [ y 1 , y 2 , … , y n ] T \boldsymbol{f}\left( y\right) = {\left\lbrack {y}_{1},{y}_{2},\ldots ,{y}_{n}\right\rbrack }^{T} f ( y ) = [ y 1 , y 2 , … , y n ] T x = [ x 1 , x 2 , … , x n ] T \boldsymbol{x} = {\left\lbrack x_1,x_2,\ldots ,{x}_{n}\right\rbrack }^{T} x = [ x 1 , x 2 , … , x n ] T

∂ y ∂ x = [ ∂ y 1 ∂ x 1 ∂ y 1 ∂ x 2 ⋯ ∂ y 1 ∂ x n ∂ y 2 ∂ x 1 ∂ y 2 ∂ x 2 ⋯ ∂ y 2 ∂ x n ⋮ ⋮ ⋱ ⋮ ∂ y n ∂ x 1 ∂ y n ∂ x 2 ⋯ ∂ y n ∂ x n ] \frac{\partial \boldsymbol{y}}{\partial \boldsymbol{x}} = \left\lbrack \begin{matrix} \frac{\partial {y}_{1}}{\partial x_1} & \frac{\partial {y}_{1}}{\partial x_2} & \cdots & \frac{\partial {y}_{1}}{\partial {x}_{n}} \\ \frac{\partial {y}_{2}}{\partial x_1} & \frac{\partial {y}_{2}}{\partial x_2} & \cdots & \frac{\partial {y}_{2}}{\partial {x}_{n}} \\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial {y}_{n}}{\partial x_1} & \frac{\partial {y}_{n}}{\partial x_2} & \cdots & \frac{\partial {y}_{n}}{\partial {x}_{n}} \end{matrix}\right\rbrack

∂ x ∂ y = ⎣ ⎢ ⎢ ⎢ ⎢ ⎢ ⎡ ∂ x 1 ∂ y 1 ∂ x 1 ∂ y 2 ⋮ ∂ x 1 ∂ y n ∂ x 2 ∂ y 1 ∂ x 2 ∂ y 2 ⋮ ∂ x 2 ∂ y n ⋯ ⋯ ⋱ ⋯ ∂ x n ∂ y 1 ∂ x n ∂ y 2 ⋮ ∂ x n ∂ y n ⎦ ⎥ ⎥ ⎥ ⎥ ⎥ ⎤

在向量微积分中,向量函数 y \boldsymbol{y} y x \boldsymbol{x} x

矩阵函数 Y \boldsymbol{Y} Y x x x 切矩阵 ,可写成

∂ Y ∂ x = [ ∂ y 11 ∂ x ∂ y 12 ∂ x … ∂ y 1 n ∂ x ∂ y 21 ∂ x ∂ y 22 ∂ x … ∂ y 2 n ∂ x ⋮ ⋮ ⋱ ⋮ ∂ y n 1 ∂ x ∂ y n 2 ∂ x … ∂ y n n ∂ x ] \frac{\partial \boldsymbol{Y}}{\partial x} = \left\lbrack \begin{matrix} \frac{\partial {y}_{11}}{\partial x} & \frac{\partial {y}_{12}}{\partial x} & \ldots & \frac{\partial {y}_{1n}}{\partial x} \\ \frac{\partial {y}_{21}}{\partial x} & \frac{\partial {y}_{22}}{\partial x} & \ldots & \frac{\partial {y}_{2n}}{\partial x} \\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial {y}_{n1}}{\partial x} & \frac{\partial {y}_{n2}}{\partial x} & \ldots & \frac{\partial {y}_{nn}}{\partial x} \end{matrix}\right\rbrack

∂ x ∂ Y = ⎣ ⎢ ⎢ ⎢ ⎢ ⎡ ∂ x ∂ y 1 1 ∂ x ∂ y 2 1 ⋮ ∂ x ∂ y n 1 ∂ x ∂ y 1 2 ∂ x ∂ y 2 2 ⋮ ∂ x ∂ y n 2 … … ⋱ … ∂ x ∂ y 1 n ∂ x ∂ y 2 n ⋮ ∂ x ∂ y n n ⎦ ⎥ ⎥ ⎥ ⎥ ⎤

从严格的数学定义来看, y \boldsymbol{y} y W \boldsymbol{W} W y \boldsymbol{y} y m m m W \boldsymbol{W} W m × n m \times n m × n m × m × n m \times m \times n m × m × n L L L W \boldsymbol{W} W ∂ L ∂ W \frac{\partial L}{\partial \boldsymbol{W}} ∂ W ∂ L

∂ L ∂ W = ∑ i = 1 m ∂ L ∂ y i ∂ y i ∂ W \frac{\partial L}{\partial \boldsymbol{W}} = \sum_{i = 1}^m\frac{\partial L}{\partial y_i}\frac{\partial y_i}{\partial \boldsymbol{W}}

∂ W ∂ L = i = 1 ∑ m ∂ y i ∂ L ∂ W ∂ y i

其中 ∂ L ∂ y i \frac{\partial L}{\partial y_i} ∂ y i ∂ L ∂ y i ∂ W \frac{\partial y_i}{\partial \boldsymbol{W}} ∂ W ∂ y i m × n m \times n m × n y i y_i y i W i j W_{ij} W i j y = W x + b \boldsymbol{y} = \boldsymbol{W}\boldsymbol{x} + \boldsymbol{b} y = W x + b

y i = ∑ k = 1 n W i k x k + b i ⇒ ∂ y i ∂ W j k = x k δ i j = { x k if i = j 0 else y_i = \sum_{k=1}^n W_{ik} x_k + b_i \\

\Rightarrow \frac{\partial y_i}{\partial W_{jk}} = x_k\delta_{ij} =

\left\{ \begin{array}{l}

x_k \quad \text{if } i=j\\

0 \quad \text{else}\\

\end{array} \right.

y i = k = 1 ∑ n W i k x k + b i ⇒ ∂ W j k ∂ y i = x k δ i j = { x k if i = j 0 else

这意味着在实际计算中,∂ y i ∂ W \frac{\partial y_i}{\partial \boldsymbol{W}} ∂ W ∂ y i i i i i i i

∂ y i ∂ W j k = x k δ i j ⇒ ∂ L ∂ W j k = ∑ i = 1 m ∂ L ∂ y i ∂ y i ∂ W j k = ∂ L ∂ y i x k \frac{\partial y_i}{\partial W_{jk}} = x_k\delta_{ij} \\

\Rightarrow \frac{\partial L}{\partial W_{jk}} = \sum_{i = 1}^m \frac{\partial L}{\partial y_i}\frac{\partial y_i}{\partial W_{jk}} = \frac{\partial L}{\partial y_i}x_k

∂ W j k ∂ y i = x k δ i j ⇒ ∂ W j k ∂ L = i = 1 ∑ m ∂ y i ∂ L ∂ W j k ∂ y i = ∂ y i ∂ L x k

观察 ∂ L ∂ W j k \frac{\partial L}{\partial W_{jk}} ∂ W j k ∂ L j j j k k k ∂ L ∂ y , x \frac{\partial L}{\partial \boldsymbol{y}}, \boldsymbol{x} ∂ y ∂ L , x

∂ L ∂ W = ∂ L ∂ y x T \frac{\partial L}{\partial \boldsymbol{W}} = \frac{\partial L}{\partial \boldsymbol{y}}\boldsymbol{x}^T

∂ W ∂ L = ∂ y ∂ L x T

从此出可以看出梯度矩阵的秩是很低的,意味着在某些情况下,模型中可能存在冗余参数。同时低秩梯度矩阵可能会导致优化过程中的平坦区域(plateaus),使得梯度下降算法收敛速度变慢。这是因为当梯度矩阵接近低秩时,Hessian矩阵(二阶导数矩阵)可能会变得病态(ill-conditioned)

由于低秩矩阵的特殊结构,可以在不损失太多精度的情况下对它们进行近似处理,比如通过奇异值分解(SVD)保留主要成分。可以用来加速训练过程

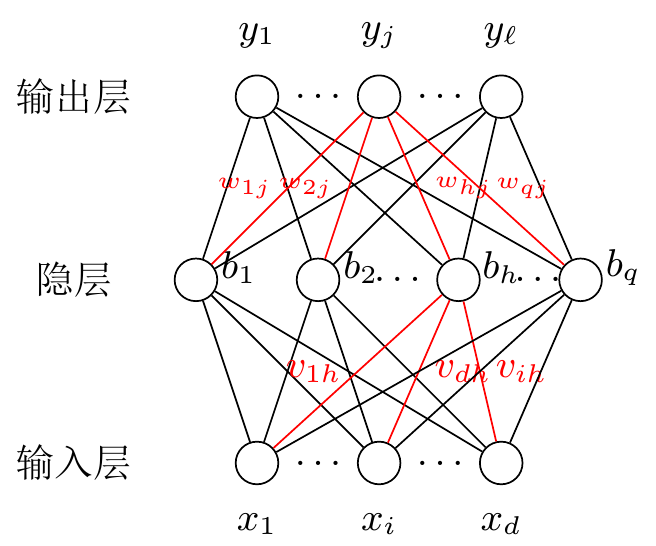

记上面的输入层的输入为 x \boldsymbol{x} x f f f b \boldsymbol{b} b

b = f ( V x + b 0 ) y = W b + b 1 \boldsymbol{b} = f(\boldsymbol{Vx} + \boldsymbol{b}_0) \\

\boldsymbol{y} = \boldsymbol{Wb} + \boldsymbol{b}_1 \\

b = f ( V x + b 0 ) y = W b + b 1

其中 b 0 , b 1 \boldsymbol{b}_0, \boldsymbol{b}_1 b 0 , b 1

d x T d x = I d x T A d x = A d A x d x = A T d x A d x = A T ∂ u T v ∂ x = ∂ u T ∂ x v + ∂ v T ∂ x u T ∂ u v T ∂ x = ∂ u ∂ x v T + u ∂ v T ∂ x d x T x d x = 2 x d x T A x d x = ( A + A T ) x ∂ u T X v ∂ X = u v T ∂ u T X T X u ∂ X = 2 X u u T ∂ [ ( X u − v ) T ( X u − v ) ] ∂ X = 2 ( X u − v ) u T \frac{d \boldsymbol{x}^T}{d \boldsymbol x} = I \quad

\frac{d \boldsymbol{x^T A}}{d \boldsymbol x} = \boldsymbol A\\

\frac{d \boldsymbol {Ax}}{d \boldsymbol x} = {A}^T\quad \frac{d \boldsymbol {xA}}{d \boldsymbol x} = \boldsymbol A^T \\

\frac{\partial \boldsymbol {u}^T \boldsymbol v}{\partial \boldsymbol x} = \frac{\partial \boldsymbol {u}^T}{\partial \boldsymbol x}\boldsymbol v + \frac{\partial \boldsymbol {v}^T}{\partial \boldsymbol x}\boldsymbol{u}^T \\

\frac{\partial \boldsymbol u{v}^T}{\partial \boldsymbol x} = \frac{\partial \boldsymbol u}{\partial \boldsymbol x}\boldsymbol{v}^T + \boldsymbol u\frac{\partial \boldsymbol {v}^T}{\partial \boldsymbol x} \\

\frac{d \boldsymbol{x^Tx}}{d \boldsymbol x} = 2\boldsymbol x\quad \frac{d \boldsymbol{x^TAx}}{d \boldsymbol x} = ( \boldsymbol {A + A^T}) \boldsymbol x \\

\frac{\partial \boldsymbol {u^T Xv}}{\partial \boldsymbol X} = \boldsymbol {uv}^T

\quad \frac{\partial \boldsymbol {u^TX^TXu}}{\partial \boldsymbol X} = \boldsymbol{2Xuu}^T \\

\frac{\partial [\boldsymbol {( Xu - v)^T( Xu - v)} ] }{\partial \boldsymbol X} = 2(\boldsymbol{Xu - v}) \boldsymbol u^T

d x d x T = I d x d x T A = A d x d A x = A T d x d x A = A T ∂ x ∂ u T v = ∂ x ∂ u T v + ∂ x ∂ v T u T ∂ x ∂ u v T = ∂ x ∂ u v T + u ∂ x ∂ v T d x d x T x = 2 x d x d x T A x = ( A + A T ) x ∂ X ∂ u T X v = u v T ∂ X ∂ u T X T X u = 2 X u u T ∂ X ∂ [ ( X u − v ) T ( X u − v ) ] = 2 ( X u − v ) u T

推导的时候不用记太死板,只需要记住布局规则和行序列序就足够了 ,例如:

( d x T A d x ) i j = d ( x T A ) j d ( x ) i = d ∑ k = 1 n x k A k j d x i = A i j ⇒ d x T A d x = A \left(\frac{d \boldsymbol{x^T A}}{d \boldsymbol x}\right)_{ij} =\frac{d (\boldsymbol{x^T A})_j}{d \boldsymbol (x)_i}

=\frac{d \sum_{k=1}^n x_kA_{kj} }{d x_i}= A_{ij}\\

\Rightarrow \frac{d \boldsymbol{x^T A}}{d \boldsymbol x} =\boldsymbol A

( d x d x T A ) i j = d ( x ) i d ( x T A ) j = d x i d ∑ k = 1 n x k A k j = A i j ⇒ d x d x T A = A

einsum 我们思考为什么上面的标量 y i y_i y i W \boldsymbol{W} W ∂ y i ∂ W \frac{\partial y_i}{\partial \boldsymbol{W}} ∂ W ∂ y i

下面我们去推导更加一般的结论,由于矩阵乘法是 einsum 的一种特例,我们直接对 einsum 总结一般性规律。我们定义基础算子为:对某一维度进行对应元素相乘并求和 ,那么矩阵乘法就是进行广播-基础算子-转置的结果,那么如果 einsum 对 2 2 2

1 2 'b i j k, j k -> b i' , A, B)

那么虽然 C \boldsymbol{C} C A , B \boldsymbol{A,B} A , B 6 , 4 6,4 6 , 4 A \boldsymbol{A} A A \boldsymbol{A} A 因此计算 A \boldsymbol{A} A 2 2 2 :

∂ L ∂ A b i j k = ∑ b i ( ∂ L ∂ C b i ∂ C b i ∂ A b i j k ) C b i = ∑ j k A b i j k B j k ⇒ ∂ L ∂ A b i j k = ∂ L ∂ C b i ⋅ B j k \frac{\partial L}{\partial \boldsymbol{A}_{bijk}} = \sum_{bi}\left( \frac{\partial L}{\partial \boldsymbol{C}_{bi}}\frac{\partial \boldsymbol{C}_{bi}}{\partial \boldsymbol{A}_{bijk}}\right) \\

\boldsymbol{C}_{bi} = \sum_{jk} \boldsymbol{A}_{bijk}\boldsymbol{B}_{jk} \\

\Rightarrow \frac{\partial L}{\partial \boldsymbol{A}_{bijk}} = \ \frac{\partial L}{\partial \boldsymbol{C}_{bi}} \cdot \boldsymbol{B}_{jk}

∂ A b i j k ∂ L = b i ∑ ( ∂ C b i ∂ L ∂ A b i j k ∂ C b i ) C b i = j k ∑ A b i j k B j k ⇒ ∂ A b i j k ∂ L = ∂ C b i ∂ L ⋅ B j k

因此可以推出 A \boldsymbol{A} A

1 dL_dA = torch.einsum('b i, j k' , dL_dC, B)

记法说明:记上面的输入层的输入为 x \boldsymbol{x} x f f f b \boldsymbol{b} b

b = f ( V x + b 0 ) y = W b + b 1 \boldsymbol{b} = f(\boldsymbol{Vx} + \boldsymbol{b}_0) \\

\boldsymbol{y} = \boldsymbol{Wb} + \boldsymbol{b}_1 \\

b = f ( V x + b 0 ) y = W b + b 1

其中 b 0 , b 1 \boldsymbol{b}_0, \boldsymbol{b}_1 b 0 , b 1

损失函数对 W \boldsymbol{W} W

∂ L ∂ W = ∂ L ∂ y ∂ y ∂ W = ∂ L ∂ y b T \frac{\partial L}{\partial \boldsymbol{W}} = \frac{\partial L}{\partial \boldsymbol{y}}\frac{\partial \boldsymbol{y}}{\partial \boldsymbol{W}} = \frac{\partial L}{\partial \boldsymbol{y}}\boldsymbol{b}^T

∂ W ∂ L = ∂ y ∂ L ∂ W ∂ y = ∂ y ∂ L b T

损失函数对 b 1 {\boldsymbol{b}}_1 b 1

∂ L ∂ b 1 = ∂ L ∂ y ∂ y ∂ b 1 = ∂ L ∂ y \frac{\partial L}{\partial \boldsymbol{b}_1} = \frac{\partial L}{\partial \boldsymbol{y}}\frac{\partial \boldsymbol{y}}{\partial \boldsymbol{b}_1} = \frac{\partial L}{\partial \boldsymbol{y}}

∂ b 1 ∂ L = ∂ y ∂ L ∂ b 1 ∂ y = ∂ y ∂ L

对于输入层到隐藏层的连接 ( V 和 b 0 ) \left( {\boldsymbol{V}\text{ 和 }{\boldsymbol{b}}_0}\right) ( V 和 b 0 ) b \boldsymbol{b} b

∂ L ∂ b = ∂ L ∂ y ∂ y ∂ b = W T ∂ L ∂ y \frac{\partial L}{\partial \boldsymbol{b}} = \frac{\partial L}{\partial \boldsymbol{y}}\frac{\partial \boldsymbol{y}}{\partial \boldsymbol{b}} = {\boldsymbol{W}}^T\frac{\partial L}{\partial \boldsymbol{y}}

∂ b ∂ L = ∂ y ∂ L ∂ b ∂ y = W T ∂ y ∂ L

现在我们可以计算 V \boldsymbol{V} V b 0 {\boldsymbol{b}}_0 b 0 f f f

∂ L ∂ ( V x + b 0 ) = ∂ L ∂ b ⊙ f ′ ( V x + b 0 ) \frac{\partial L}{\partial \left( {\boldsymbol{V}\boldsymbol{x} + {\boldsymbol{b}}_0}\right) } = \frac{\partial L}{\partial \boldsymbol{b}} \odot {f}^{\prime }( {\boldsymbol{V}\boldsymbol{x} + {\boldsymbol{b}}_0})

∂ ( V x + b 0 ) ∂ L = ∂ b ∂ L ⊙ f ′ ( V x + b 0 )

○ 损失函数对 V \boldsymbol{V} V

∂ L ∂ V = ( ∂ L ∂ b ⊙ f ′ ( V x + b 0 ) ) x T \frac{\partial L}{\partial \boldsymbol{V}} = \left( {\frac{\partial L}{\partial \boldsymbol{b}} \odot {f}^{\prime }\left( {\boldsymbol{V}\boldsymbol{x} + {\boldsymbol{b}}_0}\right) }\right) {\boldsymbol{x}}^T

∂ V ∂ L = ( ∂ b ∂ L ⊙ f ′ ( V x + b 0 ) ) x T

损失函数对 b 0 {\boldsymbol{b}}_0 b 0

∂ L ∂ b 0 = ∂ L ∂ b ⊙ f ′ ( V x + b 0 ) \frac{\partial L}{\partial {\boldsymbol{b}}_0} = \frac{\partial L}{\partial \boldsymbol{b}} \odot {f}^{\prime }\left( {\boldsymbol{V}\boldsymbol{x} + {\boldsymbol{b}}_0}\right)

∂ b 0 ∂ L = ∂ b ∂ L ⊙ f ′ ( V x + b 0 )